A Deep Dive Into OpenAI’s Assistants API

If you’re wondering how to get started with the Assistants API in Python, this is the article for you.

At their first developer conference, OpenAI released a whole suite of technologies for developers, including the new Assistants API. This post takes a deep dive into this new API endpoint.

Before the release of this API, developers faced a few challenges in building apps:

- Managing a limited context window

- Managing prompts

- Extending capabilities of the model

- Extending knowledge of the model with Retrieval Augmented Generation (RAG)

- Computing and storing embeddings

- Implementing semantic search

Developers had to do all that themselves. That’s why frameworks such as LangChain are so popular (⭐️ 68k GitHub stars at the time of writing): they abstract most of this away for us.

Even with frameworks like LangChain, there is still a lot to handle to get a custom assistant experience. For example, if you wanted to create an app that answers questions over your PDF files, LangChain makes this easier, but it still takes some effort on your end.

Now, OpenAI takes care of that for you. The same goes for the code interpreter. The release of this API gives developers the ability to create truly powerful assistants.

“The Assistants API includes persistent threads, so [developers] don’t have to figure out how to deal with long conversation history, built-in retrieval, code interpreter (a working Python interpreter in a sandbox environment), and the improved function calling.” (Sam Altman)

Developers now have access to the capabilities behind ChatGPT. Building truly state-of-the-art assistants has never been easier. This superpower is built on three building blocks that the API exposes to developers.

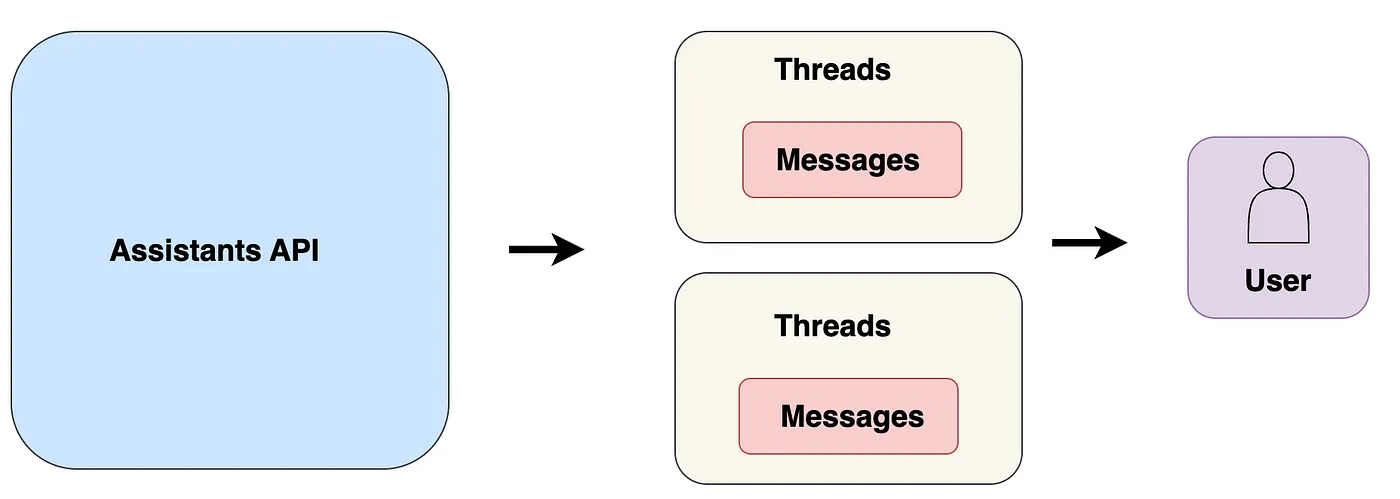

Assistants API has three building blocks

Let’s explore these building blocks.

- The Assistant: This primitive lets us give instructions on how the assistant should behave. This is also where we specify which model and tools the assistant has access to when answering user queries.

- Threads: A thread represents a conversation between your user and the assistant. The history of the conversation is tracked within the thread.

- Messages: Messages are simply posts between the user and the assistant.
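
To make the relationship between these three primitives concrete, here is a toy in-memory model. It is only a mental-model sketch: the class and field names are assumptions chosen for illustration, they are not the SDK's objects, and nothing here talks to OpenAI.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Message:
    role: str      # "user" or "assistant"
    content: str


@dataclass
class Thread:
    id: str
    messages: List[Message] = field(default_factory=list)

    def add(self, role: str, content: str) -> Message:
        """Append a message to this conversation's history."""
        message = Message(role=role, content=content)
        self.messages.append(message)
        return message


@dataclass
class Assistant:
    id: str
    instructions: str
    model: str
    tools: List[str] = field(default_factory=list)


# One assistant can serve many threads; each thread keeps its own history.
assistant = Assistant(
    id="asst_demo",  # hypothetical id
    instructions="You are a programming support chatbot.",
    model="gpt-4-1106-preview",
    tools=["code_interpreter"],
)
alice_thread = Thread(id="thread_alice")  # hypothetical ids
bob_thread = Thread(id="thread_bob")

alice_thread.add("user", "How do I print a message in Python?")
alice_thread.add("assistant", "Use the print() function.")
bob_thread.add("user", "What is a list comprehension?")
```

The point to take away is that the assistant holds the configuration, while each thread holds one conversation, so the two histories above never mix.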

In addition to these API primitives, we also have the option of powering our assistant with tools that extend its capabilities and knowledge:

- Code interpreter: This tool gives the model powering our assistant the ability to write and run code in a safe, sandboxed environment. This allows our assistant to do math, run code, and even process files and generate charts.

- Retrieval: We can now augment the assistant with knowledge not included in the model. As developers, we can upload external knowledge to the assistant; for example, a research laboratory might upload internal experiment protocols for researchers to follow. In addition, at the conversation level, users can upload files for the assistant to take into account in that specific thread.

- Function calling: We’re not limited to the built-in tools that come with this API. We can describe custom functions to the model, and the model will select the most relevant function based on the user’s query.
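
As a rough illustration of function calling, the sketch below describes a local function to the model and then dispatches a call to it. The `count_words` function, the `LOCAL_FUNCTIONS` registry and the `dispatch_tool_call` helper are hypothetical names invented for this example. The tool dictionary follows the JSON-schema layout for function tools as I understand it from the API documentation at the time of writing, so treat the exact field layout as an assumption and check the current reference. The model never runs the function itself: it only returns a function name and JSON-encoded arguments, and our code does the rest.

```python
import json


def count_words(text: str) -> int:
    """Hypothetical local function we want the model to be able to call."""
    return len(text.split())


# Description of the function that would be passed in the assistant's `tools` list.
count_words_tool = {
    "type": "function",
    "function": {
        "name": "count_words",
        "description": "Count the whitespace-separated words in a text.",
        "parameters": {
            "type": "object",
            "properties": {
                "text": {"type": "string", "description": "The text to analyse."},
            },
            "required": ["text"],
        },
    },
}

# Registry mapping tool names to local Python callables.
LOCAL_FUNCTIONS = {"count_words": count_words}


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run the local function the model selected and return its result as a JSON string."""
    if name not in LOCAL_FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    kwargs = json.loads(arguments_json)  # the model supplies arguments as a JSON string
    result = LOCAL_FUNCTIONS[name](**kwargs)
    return json.dumps(result)
```

Calling `dispatch_tool_call("count_words", '{"text": "hello brave new world"}')` runs the local function with the decoded arguments. Sending the result back to the run is left out of this sketch.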

When you first try out the Assistants API programmatically, it can be a bit confusing to get your head around how things work conceptually. This post aims to clear that up. We’ll create a simple programming assistant to help us develop a mental model of how things work in this API.

Imports

To start things off, we import all the modules we’ll need. Note that it’s important to use the latest version of OpenAI’s Python SDK (run pip install --upgrade openai to upgrade).

    import os
    from openai import OpenAI, AsyncOpenAI
    from dotenv import load_dotenv
    import asyncio
    import time

    # Load environment variables from a local .env file
    load_dotenv()
    my_key = os.getenv('OPENAI_API_KEY')

    # Async OpenAI client used by every function below
    client = AsyncOpenAI(api_key=my_key)

Create and deploy the assistant

First we create an assistant and deploy it to OpenAI’s servers. Once the assistant has been hosted, you get back an assistant object and the id associated with it for later use.

    async def create_assistant():
        # Create the assistant; OpenAI hosts it and returns an object with its id
        assistant = await client.beta.assistants.create(
            name="Coding Mate",
            instructions="You are a programming support chatbot. Use your knowledge of programming languages to best respond to user queries.",
            model="gpt-4-1106-preview",
            tools=[{"type": "code_interpreter"}],
            # file_ids=[file.id]
        )
        return assistant

This is important because it means we now have a stateful API. Before the Assistants API, if we wanted to use these instructions repeatedly, we would have to send them on every single API request to OpenAI. Now, we create the assistant once and the instructions are stored with it until we modify or delete the assistant.

If you check your OpenAI developer dashboard, you can find this assistant under the Assistants tab.
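
Because the assistant lives on OpenAI's side, a script only needs to create it once and can reuse its id afterwards. The following is a minimal sketch of that idea, assuming we are happy to cache the id in a small local JSON file. The helper name `load_or_create_assistant_id` and the cache file layout are hypothetical; `create_assistant` stands for any coroutine function that returns an object with an `id` attribute, such as the one defined above.

```python
import json
from pathlib import Path


async def load_or_create_assistant_id(cache_path, create_assistant):
    """Return a cached assistant id, creating the assistant only on first use."""
    path = Path(cache_path)
    if path.exists():
        # A previous run already created the assistant: reuse its id.
        return json.loads(path.read_text())["assistant_id"]
    # First run: create the assistant and remember its id for next time.
    assistant = await create_assistant()
    path.write_text(json.dumps({"assistant_id": assistant.id}))
    return assistant.id
```

With this in place, a program could call `await load_or_create_assistant_id("assistant.json", create_assistant)` at start-up instead of creating a fresh assistant on every launch. The sketch does not check whether the cached assistant still exists on the server.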

Add messages to thread

A thread is one session between your user and your application. It works much like ChatGPT: a user wants to start a conversation, so they click the New chat button, which creates a new thread.

Once the user has finished typing and they submit their query, we need a way to add their message to the thread. So we define a function that will add our messages to the thread.

Remember, the idea is that every conversation or dialogue will have its own thread. OpenAI will host this thread for you so that your assistant will have built-in memory of past messages in the conversation history.

    async def add_message_to_thread(thread_id, user_question):
        # Create a message inside the thread
        message = await client.beta.threads.messages.create(
            thread_id=thread_id,
            role="user",
            content=user_question
        )
        return message
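
Since every conversation gets its own thread, an application that serves several users has to remember which thread belongs to which conversation. Here is a minimal in-memory sketch of that bookkeeping. The `ThreadRegistry` class and its method names are hypothetical, and `create_thread` is assumed to be any coroutine function returning an object with an `id` attribute (for instance `client.beta.threads.create` from the client used in this post).

```python
class ThreadRegistry:
    """Remember which thread id belongs to which conversation (in memory only)."""

    def __init__(self, create_thread):
        self._create_thread = create_thread
        self._thread_ids = {}

    async def thread_id_for(self, conversation_key):
        """Return the thread id for a conversation, creating a thread on first use."""
        if conversation_key not in self._thread_ids:
            thread = await self._create_thread()
            self._thread_ids[conversation_key] = thread.id
        return self._thread_ids[conversation_key]
```

A real application would probably store this mapping in a database so that conversations survive a restart. This sketch also does not guard against two concurrent requests for the same new conversation.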

Get response back from assistant

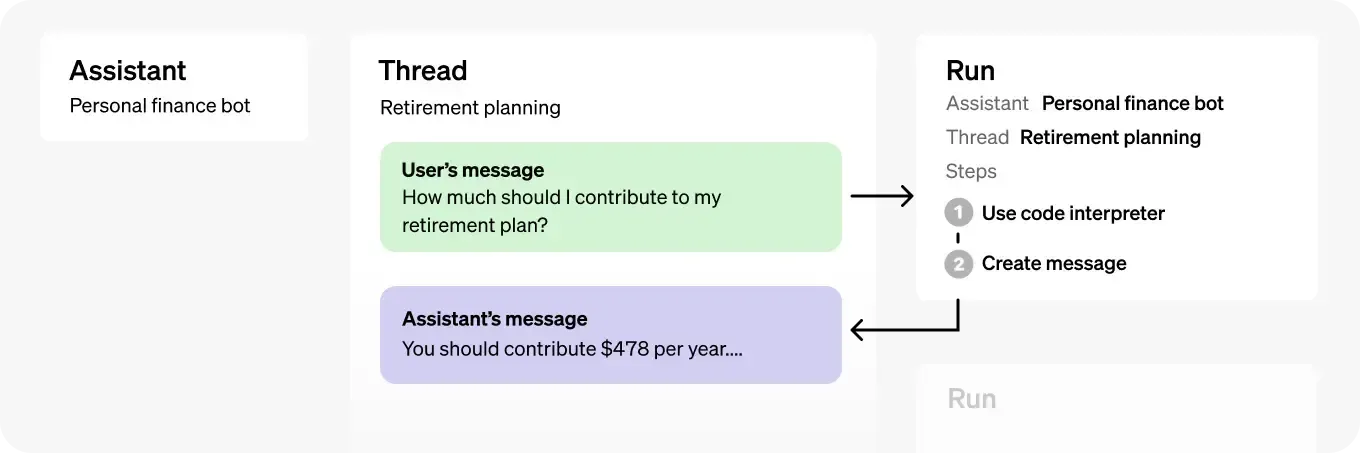

Now, simply adding a message to the thread doesn’t trigger our assistant to answer it. For that, we need to run the assistant, which is equivalent to telling it: “Hey assistant (with a particular id), I’ve added a new message to our conversation (i.e. thread.id), and I want you to answer it.”

This will trigger the Assistant to read the Thread and decide if it needs to call tools or simply use the model to respond to our message.

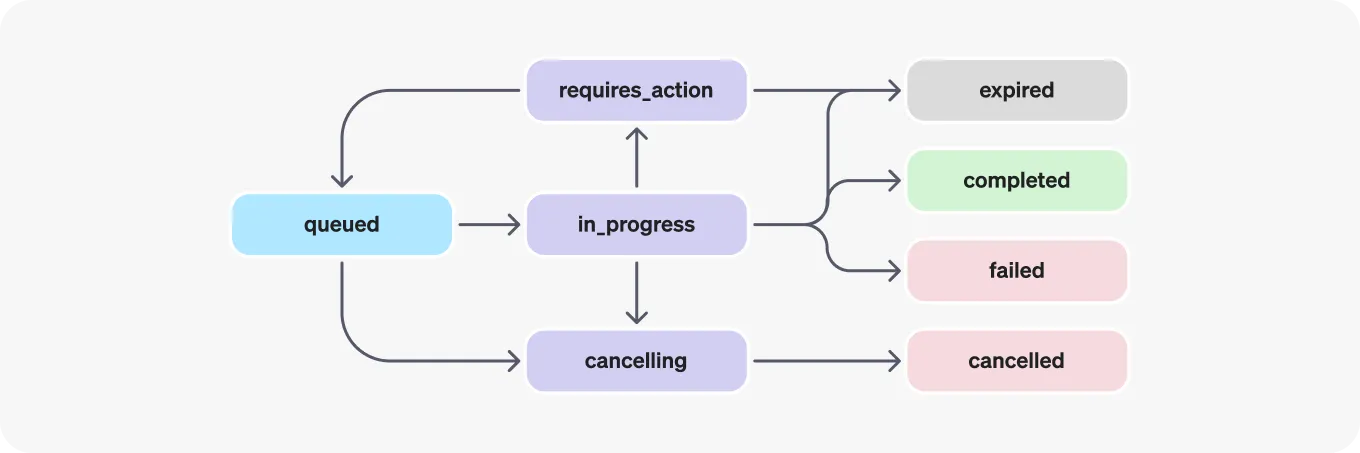

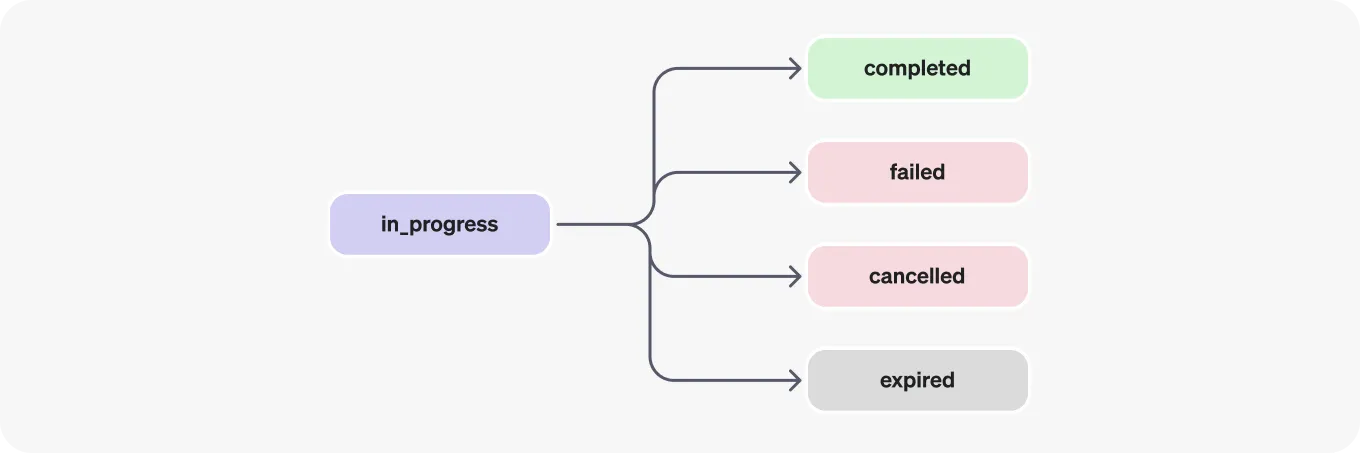

When we run the assistant, the resulting Run object goes through a lifecycle from the moment it is created until it has completed. First it is placed in a queue (queued), from there it moves on to the in_progress stage, and finally it reaches completed. A Run can also end in other states, such as failed, cancelled or expired.

It’s important to keep this cycle in mind because while a Run is in_progress and not yet completed, the Thread is locked. This means we can’t add new messages or run the assistant again until the Run has finished.

That’s why in our get_answer() function below, we’re creating a Run and waiting until it has completed before attempting to retrieve the messages returned by the assistant.

    async def get_answer(assistant_id, thread):
        print("Thinking...")

        # Run the assistant on the thread
        print("Running assistant...")
        run = await client.beta.threads.runs.create(
            thread_id=thread.id,
            assistant_id=assistant_id
        )

        # Poll until the run completes
        while True:
            run_info = await client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
            if run_info.completed_at:
                # elapsed = run_info.completed_at - run_info.created_at
                # elapsed = time.strftime("%H:%M:%S", time.gmtime(elapsed))
                print("Run completed")
                break
            # Stop instead of looping forever if the run ended without completing
            if run_info.status in ("failed", "cancelled", "expired"):
                raise RuntimeError(f"Run ended with status: {run_info.status}")
            print("Waiting 1sec...")
            # asyncio.sleep yields to the event loop; time.sleep would block it
            await asyncio.sleep(1)

        print("All done...")

        # Messages are listed newest first, so data[0] is the assistant's reply
        messages = await client.beta.threads.messages.list(thread.id)
        message_content = messages.data[0].content[0].text.value
        return message_content
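
The polling loop in get_answer() can also be factored into a small reusable helper with a timeout, so that a stuck run cannot keep the program waiting indefinitely. This is a sketch under stated assumptions rather than part of the SDK: `wait_for_run`, `fetch_status` and `TERMINAL_STATES` are hypothetical names, and the set of terminal states reflects my reading of the beta documentation at the time of writing.

```python
import asyncio

# Assumption: statuses after which a run will not change any more.
TERMINAL_STATES = {"completed", "failed", "cancelled", "expired"}


async def wait_for_run(fetch_status, poll_interval=1.0, timeout=60.0):
    """Poll `fetch_status` until the run reaches a terminal state.

    `fetch_status` is any coroutine function returning the run's current
    status string. Raises TimeoutError if no terminal state is reached
    within `timeout` seconds.
    """
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout
    while True:
        status = await fetch_status()
        if status in TERMINAL_STATES:
            return status
        if loop.time() >= deadline:
            raise TimeoutError(f"Run still '{status}' after {timeout} seconds")
        # Yield to the event loop between polls instead of blocking it.
        await asyncio.sleep(poll_interval)
```

To use it with the client from this post, `fetch_status` could be a small coroutine that calls client.beta.threads.runs.retrieve() for the current thread and run, and returns the status field of the result. The caller then checks whether the returned status is "completed" before reading the messages.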

And with that, we have everything we need to create a main() function that will bring everything together.

The main function to run our assistant

We’ll make a few additions inside this main() function. For debugging purposes, a class of colour codes is included to make it easier to check we’re always on the same thread and assistant.

    async def main():
        # ANSI colour codes for terminal output
        class bcolors:
            HEADER = '\033[95m'
            OKBLUE = '\033[94m'
            OKCYAN = '\033[96m'
            OKGREEN = '\033[92m'
            WARNING = '\033[93m'
            FAIL = '\033[91m'
            ENDC = '\033[0m'
            BOLD = '\033[1m'
            UNDERLINE = '\033[4m'

        # Create assistant and thread before entering the loop
        assistant = await create_assistant()
        print("Created assistant with id:" , f"{bcolors.HEADER}{assistant.id}{bcolors.ENDC}")
        thread = await client.beta.threads.create()
        print("Created thread with id:" , f"{bcolors.HEADER}{thread.id}{bcolors.ENDC}")

        while True:
            question = input("How may I help you today? \n")
            # Quit only on the bare word "exit", so that questions which merely
            # contain it (e.g. "How do I exit a loop?") are still answered
            if question.strip().lower() == "exit":
                break

            # Add message to thread
            await add_message_to_thread(thread.id, question)
            message_content = await get_answer(assistant.id, thread)
            print(f"FYI, your thread is: , {bcolors.HEADER}{thread.id}{bcolors.ENDC}")
            print(f"FYI, your assistant is: , {bcolors.HEADER}{assistant.id}{bcolors.ENDC}")
            print(message_content)

        print(f"{bcolors.OKGREEN}Thanks and happy to serve you{bcolors.ENDC}")
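
One optional refinement: input() is a blocking call, so while the program waits for the user to type, nothing else on the event loop can run. That is harmless in a single-task script like this one, but it matters as soon as other coroutines run alongside the chat loop. A minimal sketch of a non-blocking prompt, assuming Python 3.9 or newer for asyncio.to_thread (the `ask` helper and its `reader` parameter are hypothetical names):

```python
import asyncio


async def ask(prompt, reader=input):
    """Read one line of user input in a worker thread.

    `reader` defaults to the built-in input(); it is a parameter so the
    function can be exercised without a real terminal.
    """
    return await asyncio.to_thread(reader, prompt)
```

Inside main(), the line that reads the question could then become `question = await ask("How may I help you today? \n")`.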

Here’s the full code that we’ll be running. Assuming your file is named main.py, you can run the program from your terminal with python main.py.

    import os
    from openai import OpenAI, AsyncOpenAI
    from dotenv import load_dotenv
    import asyncio
    import time

    # Load environment variables from a local .env file
    load_dotenv()
    my_key = os.getenv('OPENAI_API_KEY')

    # Async OpenAI client used by every function below
    client = AsyncOpenAI(api_key=my_key)


    async def create_assistant():
        # Create the assistant; OpenAI hosts it and returns an object with its id
        assistant = await client.beta.assistants.create(
            name="Coding Mate",
            instructions="You are a programming support chatbot. Use your knowledge of programming languages to best respond to user queries.",
            model="gpt-4-1106-preview",
            tools=[{"type": "code_interpreter"}],
            # file_ids=[file.id]
        )
        return assistant


    async def add_message_to_thread(thread_id, user_question):
        # Create a message inside the thread
        message = await client.beta.threads.messages.create(
            thread_id=thread_id,
            role="user",
            content=user_question
        )
        return message


    async def get_answer(assistant_id, thread):
        print("Thinking...")

        # Run the assistant on the thread
        print("Running assistant...")
        run = await client.beta.threads.runs.create(
            thread_id=thread.id,
            assistant_id=assistant_id
        )

        # Poll until the run completes
        while True:
            run_info = await client.beta.threads.runs.retrieve(thread_id=thread.id, run_id=run.id)
            if run_info.completed_at:
                # elapsed = run_info.completed_at - run_info.created_at
                # elapsed = time.strftime("%H:%M:%S", time.gmtime(elapsed))
                print("Run completed")
                break
            # Stop instead of looping forever if the run ended without completing
            if run_info.status in ("failed", "cancelled", "expired"):
                raise RuntimeError(f"Run ended with status: {run_info.status}")
            print("Waiting 1sec...")
            # asyncio.sleep yields to the event loop; time.sleep would block it
            await asyncio.sleep(1)

        print("All done...")

        # Messages are listed newest first, so data[0] is the assistant's reply
        messages = await client.beta.threads.messages.list(thread.id)
        message_content = messages.data[0].content[0].text.value
        return message_content


    async def main():
        # ANSI colour codes for terminal output
        class bcolors:
            HEADER = '\033[95m'
            OKBLUE = '\033[94m'
            OKCYAN = '\033[96m'
            OKGREEN = '\033[92m'
            WARNING = '\033[93m'
            FAIL = '\033[91m'
            ENDC = '\033[0m'
            BOLD = '\033[1m'
            UNDERLINE = '\033[4m'

        # Create assistant and thread before entering the loop
        assistant = await create_assistant()
        print("Created assistant with id:" , f"{bcolors.HEADER}{assistant.id}{bcolors.ENDC}")
        thread = await client.beta.threads.create()
        print("Created thread with id:" , f"{bcolors.HEADER}{thread.id}{bcolors.ENDC}")

        while True:
            question = input("How may I help you today? \n")
            # Quit only on the bare word "exit", so that questions which merely
            # contain it (e.g. "How do I exit a loop?") are still answered
            if question.strip().lower() == "exit":
                break

            # Add message to thread
            await add_message_to_thread(thread.id, question)
            message_content = await get_answer(assistant.id, thread)
            print(f"FYI, your thread is: , {bcolors.HEADER}{thread.id}{bcolors.ENDC}")
            print(f"FYI, your assistant is: , {bcolors.HEADER}{assistant.id}{bcolors.ENDC}")
            print(message_content)

        print(f"{bcolors.OKGREEN}Thanks and happy to serve you{bcolors.ENDC}")


    if __name__ == "__main__":
        asyncio.run(main())

Here’s an example of the output we get.

    Created assistant with id: asst_fmkEuFDGorNdIXyJDfhyzISr
    Created thread with id: thread_UmlRWqkY8XTURPle0QMowRoq
    How may I help you today?
    How do I print a message in Python?
    Thinking...
    Running assistant...
    Waiting 1sec...
    Waiting 1sec...
    Run completed
    All done...
    FYI, your thread is: , thread_UmlRWqkY8XTURPle0QMowRoq
    FYI, your assistant is: , asst_fmkEuFDGorNdIXyJDfhyzISr
    In Python, you can print a message using the `print()` function. You can pass the message you want to display as a string argument to the function. Here's an example:

    print("Hello, world!")

    This line of code will output:

    Hello, world!

    Would you like to see this in action by running an example?
    How may I help you today?