Extracting Structured Data From Documents with GPT-4 Turbo

A reliable approach to getting structured data from Large Language Models.

📚👨🏾💻What you’ll learn:

- Extracting data from PDF documents using GPT-4 Turbo

- Chaining multiple LLM calls

When OpenAI released GPT-4 Vision, it gave applications the ability to accurately interpret and analyse visual data such as resumes.

Their latest GPT-4 Turbo with Vision now enables the use of JSON mode and function calling. This means that we no longer have to pray to the AI gods that the LLM returns the correct data.

We simply instruct the LLM to output the data in a particular format. When we need to run our code in production, this idea of structured prompting becomes important.

Once we have converted this unstructured data into native types that suit a variety of programmatic use cases, the sky is the limit in terms of the applications we can build.

What we’re building: Resume Parser and Cover Letter Drafter

Suppose you’re prototyping a job search application that leverages AI to automate the job search process for your users.

In this tutorial, we’ll build a simple resume parser that accepts a resume (in PDF format) from a user, extracts details from the uploaded resume and forwards these results to another function that drafts a cover letter using these details.

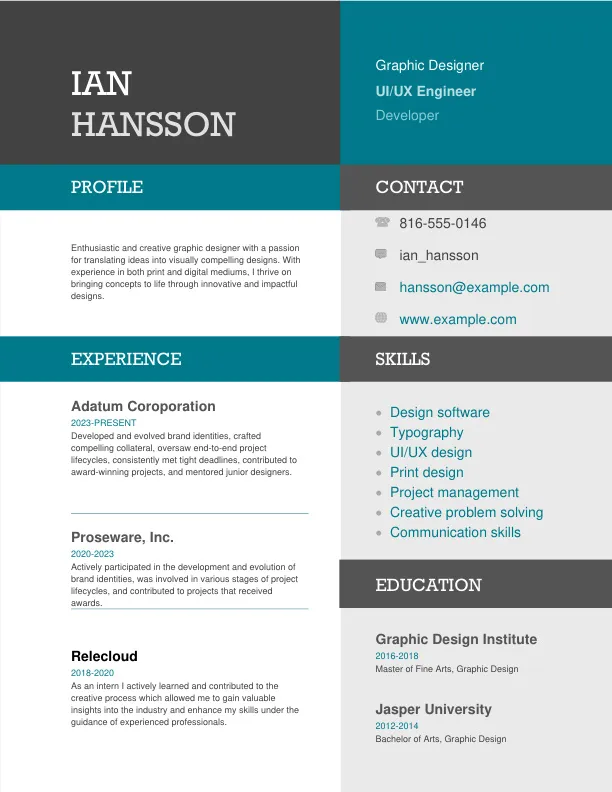

Here’s the example resume we’ll be using throughout this tutorial.

The code

To get started, let’s import all the dependencies we’ll need.

import base64

import os

from enum import Enum

from io import BytesIO

from typing import Literal, Optional, List, Annotated

from pathlib import Path

from tempfile import TemporaryDirectory

import json

import fitz

# Instructor is powered by Pydantic, which is powered by type hints. Schema validation, prompting is controlled by type annotations

import instructor

import matplotlib.pyplot as plt

import pandas as pd

from IPython.display import display

from PIL import Image

from openai import OpenAI

from pydantic import BaseModel, Field

from langsmith.run_helpers import traceable # Allows us to trace without langchain

Defining the data structures

This is where we define how we want the model to output the data. Instead of simply wishing that the LLM returns the right data from our resume, we can define Pydantic models that represent the output structure we want.

First, we define the different sections we anticipate every resume should have (About, Contact, Education, Experience, etc.). The great thing about this approach is that, even if the resume doesn’t quite define these sections as we have, the LLM will usually be intelligent enough to figure out what’s what.

These different sections will then make up the final CompleteResume, which can be passed to our API call as the output format.

Note that these Pydantic models are simple for demonstration purposes. Feel free to extend and customise them as you need.

class AboutSection(BaseModel):
    """Defines the about section of a resume"""
    name: str = Field(..., description="The name of the person")
    summary: str = Field(..., description="The summary of the person")
    title: Optional[str] = Field(..., description="The title of the person")
    location: Optional[str] = Field(..., description="The location of the person")
    image: Optional[str] = Field(..., description="The image of the person")

class ContactSection(BaseModel):
    """Defines the contact section of a resume"""
    email: str = Field(..., description="The email of the person")
    phone: str = Field(..., description="The phone of the person")
    linkedin: Optional[str] = Field(..., description="The linkedin of the person")
    github: Optional[str] = Field(..., description="The github of the person")
    website: Optional[str] = Field(..., description="The website of the person")

class ExperienceSection(BaseModel):
    """Defines the experience section of a resume"""
    title: str = Field(..., description="The title of the position")
    company: str = Field(..., description="Name of the company")
    location: str = Field(..., description="The location of the company")
    start_date: str = Field(..., description="The start date of the experience")
    end_date: str = Field(..., description="The end date of the experience")
    description: str = Field(..., description="The description of the experience")

class EducationSection(BaseModel):
    """Defines the education section of a resume"""
    school: str = Field(..., description="Name of the school")
    degree: str = Field(..., description="Name of the degree")
    location: Optional[str] = Field(..., description="The location of the education")
    start_date: str = Field(..., description="The start date of the education")
    end_date: str = Field(..., description="The end date of the education")
    description: str = Field(..., description="The description of the education")

class CompleteResume(BaseModel):
    """Defines the complete resume"""
    about: AboutSection = Field(..., description="The about section of the resume")
    contact: ContactSection = Field(..., description="The contact section of the resume")
    education: List[EducationSection] = Field(..., description="The education section of the resume")
    experience: List[ExperienceSection] = Field(..., description="The experience section of the resume")
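One detail of these models is worth a quick check: a field declared as Optional[str] = Field(...) may hold null, but it is still required, so the key has to be present in the model's output. Here is a minimal sketch of that behaviour, assuming Pydantic v2. MiniAbout is a hypothetical cut-down model used only for illustration; it is not part of the tutorial's code.

```python
from typing import Optional

from pydantic import BaseModel, Field, ValidationError


class MiniAbout(BaseModel):
    """Hypothetical, cut-down version of AboutSection for illustration."""

    name: str = Field(..., description="The name of the person")
    title: Optional[str] = Field(..., description="The title of the person")


# An explicit null is accepted for an Optional field...
with_null = MiniAbout.model_validate({"name": "Ian Hansson", "title": None})

# ...but leaving the key out fails validation, because `...` marks it as required.
try:
    MiniAbout.model_validate({"name": "Ian Hansson"})
    missing_key_rejected = False
except ValidationError:
    missing_key_rejected = True
```

If you would rather allow a key to be omitted altogether, give the field a default instead, for example Field(None, description="...").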

Document Preprocessing

Great. With our structured output format defined, we’re ready to feed our resume to the LLM. While you can have your resume in any format, let’s assume that most will be PDFs.

This means we have to do some preprocessing before we can proceed, because the vision model takes images as input, passed either as a URL or as base64-encoded data, rather than PDF files.

So we’ll define some helper functions that convert a page of a PDF file into a base64-encoded image.

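What is base64? 🤔 It is an encoding that represents binary data as plain ASCII text, which lets us embed the bytes of an image directly in a JSON request.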
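To make the encoding step concrete, here is a minimal sketch that uses only the standard library. The bytes are a stand-in rather than a real image, and the variable names are chosen for illustration. It shows the round trip and the data URL form that the API call later in this tutorial relies on.

```python
import base64

# Stand-in bytes for an image file; a real JPEG would be read with open(path, "rb").
raw_bytes = b"\xff\xd8\xff\xe0 not a real image"

# Encode to base64 text so the bytes can be embedded in a JSON payload.
encoded = base64.b64encode(raw_bytes).decode("utf-8")

# Wrap the text in a data URL that declares the image type.
data_url = f"data:image/jpeg;base64,{encoded}"

# Decoding returns exactly the original bytes.
decoded = base64.b64decode(encoded)
```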

# Function to encode the image as base64
def encode_image(image_path: str) -> str:
    # Check that the image exists
    if not os.path.exists(image_path):
        raise FileNotFoundError(f"Image file not found: {image_path}")
    with open(image_path, "rb") as image_file:
        return base64.b64encode(image_file.read()).decode('utf-8')

# Function to convert a single PDF page to a JPEG image
def convert_pdf_page_to_jpg(pdf_path: str, output_path: str, page_number=0):
    if not os.path.exists(pdf_path):
        raise FileNotFoundError(f"PDF file not found: {pdf_path}")
    # Open with a context manager so the document is closed afterwards
    with fitz.open(pdf_path) as doc:
        page = doc.load_page(page_number)  # 0 is the first page
        pix = page.get_pixmap()
        # Save the pixmap; PyMuPDF infers the image format from the file
        # extension, so output_path should end in .jpg or .jpeg
        pix.save(output_path)

def display_img_local(image_path: str):
    img = Image.open(image_path)
    display(img)

For your own sanity, you can check if you’re able to successfully convert your PDF file to a JPG.
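One quick way to run that check without opening the file in a viewer is to look at its first bytes, since JPEG files start with a fixed three-byte signature. The sketch below uses only the standard library. looks_like_jpeg is a hypothetical helper, and the demo runs on throwaway files rather than a real converted resume.

```python
import tempfile
from pathlib import Path

JPEG_MAGIC = b"\xff\xd8\xff"  # JPEG files begin with these three bytes


def looks_like_jpeg(image_path: str) -> bool:
    """Return True if the file starts with the JPEG signature (hypothetical helper)."""
    with open(image_path, "rb") as image_file:
        return image_file.read(3) == JPEG_MAGIC


# Demo on throwaway files, since no real resume image is assumed here.
with tempfile.TemporaryDirectory() as tmp_dir:
    fake_jpg = Path(tmp_dir) / "page.jpg"
    fake_jpg.write_bytes(JPEG_MAGIC + b"\xe0rest-of-file")
    fake_png = Path(tmp_dir) / "page.png"
    fake_png.write_bytes(b"\x89PNG\r\n\x1a\n")
    jpg_ok = looks_like_jpeg(str(fake_jpg))
    png_ok = looks_like_jpeg(str(fake_png))
```

A check like this matters because the request later labels the payload as image/jpeg, so the saved file should really be a JPEG.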

And now we define parse_resume(), a function that takes a PDF file as input, preprocesses it using the helper functions defined above, then asks GPT-4 Turbo to extract information from it in the specified output format (or response model). 😊🚀

pdf_path = "YOUR_PATH_HERE"
resume_jpg_path = "YOUR_PATH_HERE"

def parse_resume(
    pdf_path: Annotated[str, "Path to the PDF file of the resume."] = pdf_path,
    output_image_path: Annotated[str, "Path where the converted image should be saved."] = resume_jpg_path,
    page_number: Annotated[int, "The page number of the PDF to convert."] = 0
) -> Annotated[str, "A JSON string of the resume data."]:
    """
    Parses the resume from a PDF file, converts it to an image, encodes the image,
    and returns the JSON string of the resume data.
    """
    # Convert PDF page to JPG
    convert_pdf_page_to_jpg(pdf_path, output_image_path, page_number)

    # Encode the image
    base64_img = encode_image(output_image_path)

    # Proceed with parsing the resume using the encoded image
    response = instructor.from_openai(OpenAI()).chat.completions.create(
        model='gpt-4-turbo',
        response_model=CompleteResume,
        messages=[
            {
                "role": "user",
                "content": 'Analyze the given resume and very carefully extract the information.',
            },
            {
                "role": "user",
                "content": [
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": f"data:image/jpeg;base64,{base64_img}"
                        }
                    },
                ],
            }
        ],
    )

    result = response.model_dump_json(indent=2)
    return result

By defining the response as a Pydantic model, we can use Instructor’s response_model argument to instruct the model to generate the desired output, which Instructor then validates against that model. This is a powerful feature that allows us to get structured data out of a language model!

Time to test this function:

# Test the parse_resume function

result = parse_resume()

print(result)

{
  "about": {
    "name": "Ian Hansson",
    "summary": "Enthusiastic and creative graphic designer with a passion for translating ideas into visually compelling designs. With experience in both print and digital mediums, I thrive on bringing concepts to life through innovative and impactful designs.",
    "title": "Graphic Designer",
    "location": null,
    "image": null
  },
  "contact": {
    "email": "hansson@example.com",
    "phone": "816-555-0146",
    "linkedin": null,
    "github": "ian_hansson",
    "website": "www.example.com"
  },
  "education": [
    {
      "school": "Graphic Design Institute",
      "degree": "Master of Fine Arts, Graphic Design",
      "location": null,
      "start_date": "2016",
      "end_date": "2018",
      "description": ""
    },
    {
      "school": "Jasper University",
      "degree": "Bachelor of Arts, Graphic Design",
      "location": null,
      "start_date": "2012",
      "end_date": "2014",
      "description": ""
    }
  ],
  "experience": [
    {
      "title": "Graphic Designer",
      "company": "Adatum Corporation",
      "location": " ",
      "start_date": "2023",
      "end_date": "PRESENT",
      "description": "Developed and evolved brand identities, crafted compelling collateral, oversaw end-to-end project lifecycles, consistently met tight deadlines, contributed to award-winning projects, and mentored junior designers."
    },
    {
      "title": "Graphic Designer",
      "company": "Proseware, Inc.",
      "location": " ",
      "start_date": "2020",
      "end_date": "2023",
      "description": "Actively participated in the development and evolution of brand identities, was involved in various stages of project lifecycles, and contributed to projects that received awards."
    },
    {
      "title": "Intern Graphic Designer",
      "company": "Relecloud",
      "location": " ",
      "start_date": "2018",
      "end_date": "2020",
      "description": "Actively learned and contributed to the creative process which allowed me to gain valuable insights into the industry and enhance my skills under the guidance of experienced professionals."
    }
  ]
}
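Because parse_resume() returns a JSON string rather than a Python object, any code that wants individual fields has to parse it first. Here is a minimal sketch using the standard library and an abbreviated copy of the output above. The variable names are chosen for illustration.

```python
import json

# Abbreviated copy of the JSON string returned by parse_resume().
result_json = """
{
  "about": {"name": "Ian Hansson", "title": "Graphic Designer", "location": null},
  "experience": [
    {"title": "Graphic Designer", "company": "Adatum Corporation", "start_date": "2023", "end_date": "PRESENT"},
    {"title": "Graphic Designer", "company": "Proseware, Inc.", "start_date": "2020", "end_date": "2023"}
  ]
}
"""

# Parse the string into plain dictionaries and lists.
resume = json.loads(result_json)

name = resume["about"]["name"]
location = resume["about"]["location"]  # JSON null becomes None
companies = [job["company"] for job in resume["experience"]]
```

If you want typed objects instead of dictionaries, one option under Pydantic v2 is to load the string back with CompleteResume.model_validate_json(result).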

With the resume’s data returned to us as a JSON string, we can chain it into an additional LLM call as a follow-up question. So let’s define another function that will allow us to do just that.

import os
from openai import OpenAI

client = OpenAI(
    # This is the default and can be omitted
    api_key=os.environ.get("OPENAI_API_KEY"),
)

def follow_up_chat(resume_data, query) -> str:
    chat_completion = client.chat.completions.create(
        messages=[
            {
                "role": "user",
                "content": f"""You are a helpful assistant tasked with helping users applying for jobs.
A user gives you their resume details in the following JSON format: {resume_data}.
{query}
""",
            }
        ],
        model="gpt-4-turbo",
    )
    return chat_completion.choices[0].message.content
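The chaining pattern itself does not depend on any particular API: the output of one step simply becomes part of the prompt for the next. The sketch below isolates that idea so it can be run and inspected without an API key. build_follow_up_prompt, run_chain and the two stub functions are hypothetical names introduced for illustration, with the stubs standing in for parse_resume() and the chat completion call.

```python
from typing import Callable


def build_follow_up_prompt(resume_data: str, query: str) -> str:
    """Build the same kind of prompt as follow_up_chat, without calling an API."""
    return (
        "You are a helpful assistant tasked with helping users applying for jobs.\n"
        f"A user gives you their resume details in the following JSON format: {resume_data}.\n"
        f"{query}\n"
    )


def run_chain(extract: Callable[[], str], complete: Callable[[str], str], query: str) -> str:
    """Feed the output of the first step into the prompt of the second."""
    resume_data = extract()
    prompt = build_follow_up_prompt(resume_data, query)
    return complete(prompt)


# Stub steps standing in for parse_resume() and the chat completion call.
def fake_extract() -> str:
    return '{"about": {"name": "Ian Hansson"}}'


def fake_complete(prompt: str) -> str:
    return f"(model reply to a {len(prompt)}-character prompt)"


reply = run_chain(fake_extract, fake_complete, "Draft a cover letter.")
```

Keeping the prompt construction in its own function makes it easy to print and review exactly what the second call will receive before spending tokens on it.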

And we can now ask our follow-up question.

my_query = "Given this resume data, please draft a cover letter for an entry level job as a graphic designer."

resume_data = result

llm_response = follow_up_chat(resume_data, my_query)

print(llm_response)

[Your Address]

[City, State, Zip Code]

[Email Address]

[Phone Number]

[Today’s Date]

[Employer's Name]

[Company Name]

[Company Address]

[City, State, Zip Code]

Dear [Employer's Name],

I am writing to express my interest in the entry-level Graphic Designer position as advertised. With a Master of Fine Arts in Graphic Design from the Graphic Design Institute and a Bachelor of Arts in Graphic Design from Jasper University, I am equipped with the formal training and practical experience required to contribute effectively to your team.

Throughout my career, I have demonstrated a unique ability to blend creativity and efficiency in designing compelling visuals for both print and digital media. My recent role as a Graphic Designer at Adatum Corporation involved developing brand identities, creating persuasive collateral, and managing the full lifecycle of design projects, which honed my skills in delivering snappy solutions under tight deadlines. The projects I have contributed to have not only met client expectations but have also received industry recognition.

At Proseware, Inc, I further deepened my design expertise by actively participating in various stages of project lifecycles, from conceptualization to execution. This experience reaffirmed my passion for crafting visual stories that elevate and articulate brand narratives effectively.

As an intern at Relecloud, where I began my professional journey, I was immersed in the creative process under the mentorship of seasoned designers, gaining invaluable insights and enhancing my skill set significantly. This role was foundational in shaping my approach to design as an engaging, dynamic interaction with content to produce visually appealing and strategically sound outcomes.

I am particularly drawn to [Company Name] because of your commitment to [mention any known company values or projects], an area I am passionate about and have experience in. Joining a forward-thinking company like yours would not only provide me an excellent platform to apply my skills but also fulfill my passion for innovative graphic design.

My resume is attached for your review, and I look forward to the possibility of discussing this exciting opportunity with you. Thank you for considering my application. I am eager to bring my background in graphics design and my creative drive to [Company Name] as your new Graphic Designer.

Warm regards,

Ian Hansson

And there you have it. We have seen how to reliably extract structured data from a resume. We also got a taste of how we might go about chaining multiple LLM calls together, setting the input of one LLM call to be the output of another LLM call.

Hopefully this gives you a good foundation to build your own pipelines for more interesting applications.

Stay tuned for a follow-up tutorial covering how to apply this to agents. Until next time 👋🏾. Keep learning!

References

How to use GPT-4 Vision with Function Calling (OpenAI Cookbook)